So much for spring

Rob Miles on the web. Also available in Real Life (tm)

I took this picture yesterday, when we had a brief burst of sunshine. I had a look at the flowers today, after the weather returned to grey wet misery, and they have closed up again.

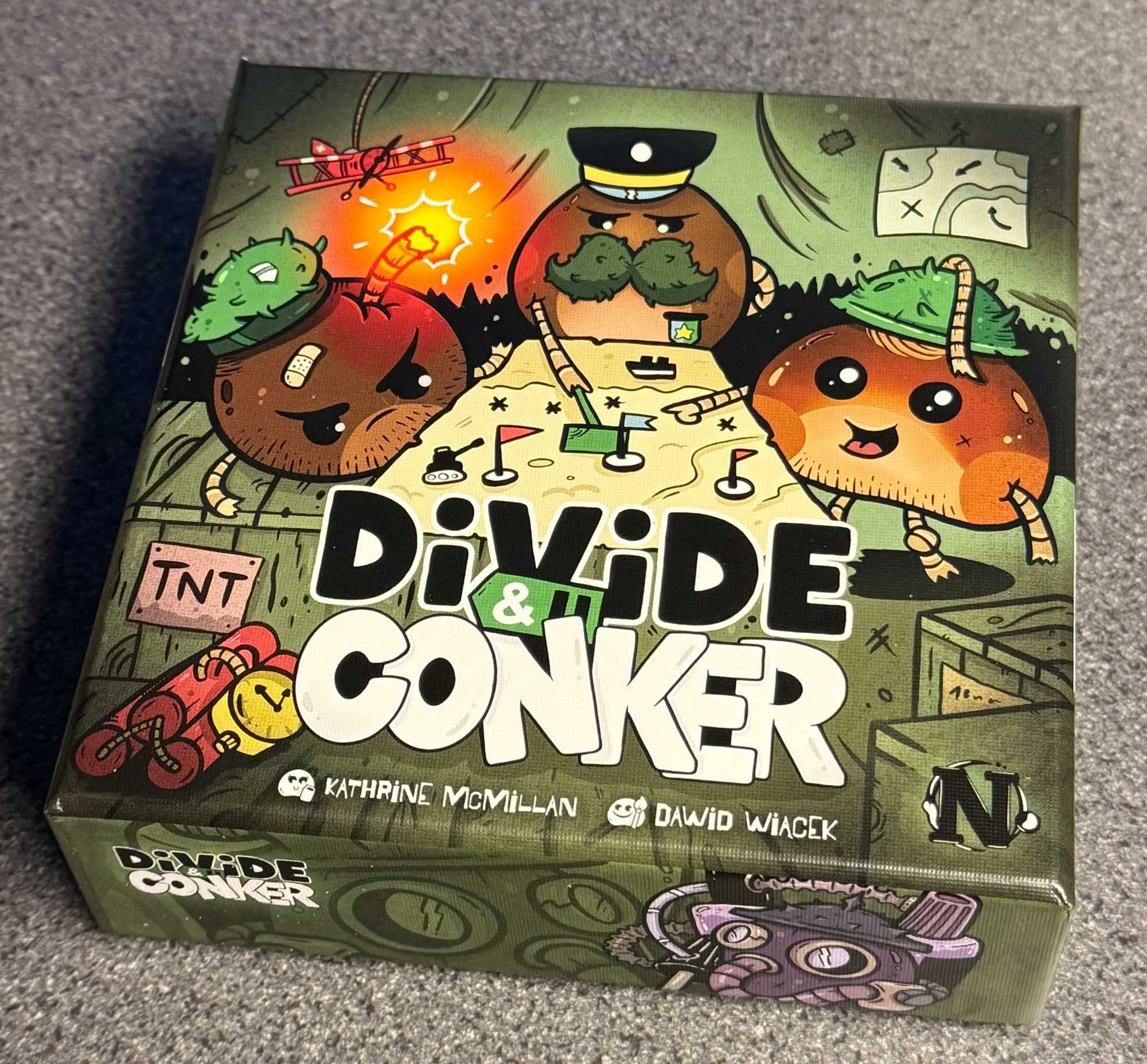

We played Divide & Conker at Tabletop Gaming Live today. It’s a lovely little four-player game that hangs on the English tradition of getting a conker, putting a shoelace through it and then bashing it against other conkers in a bid to be the last one unbroken.

Just like in real life, you can use all kinds of strategies to improve your chances. You can bake your conker, use a stronger lace and whatnot. Once you’ve picked your contender you take it down to the park to do battle. Then, in the course of a series of dice-powered rounds, you discover what you are really made of. In my case it wasn’t much. Oh well.

A lot of the fun comes from the beautifully illustrated cards, the wacky weapons and the different conker personalities on show, but the underlying gameplay is nicely structured too. If you fancy a game where you can really take it out on your opponents and where a lucky streak can suddenly turn the tables, then you will love it.

Oh, and the gaming show was wonderful. It was at Doncaster Racecourse, which turned out to be a splendid venue. Just the right balance of interesting stalls and space to play with your newly acquired games. I hope they run it again next year. If they do, we’ll be there with our conkers at the ready…

The unit on the right will never light up green

I’ve spent the last couple of days working on some Bluetooth code to remotely control a Polaroid camera. I’ve got the code working and now I’m looking for suitably small units to host it. I’ve always been a fan of the M5Stack Atom device, particularly the one with the 25 LED dot matrix display. The software runs a treat on it, and so I thought I’d add battery power for a properly mobile experience. I got a couple of M5 external batteries, the Tailbat on the left and the Atomic Battery Base on the right. Both work fine, but unfortunately the Atomic base seems to block the Bluetooth signal. Wah.

It does look super cool

The instax mini Evo Cinema is a strange camera. And I quite like strange cameras so I got hold of one to play with. If you are the right age, you might think it looks a lot like an olde school home movie camera. And it takes genuine olde school movies as digital files. The viewfinder can be removed, exposing a small LCD touch screen that serves as the viewfinder and control input.

The big knob on the right hand side of the camera (known as the “eras” selector) lets you pick a decade and then the camera approximates the look and sound of cameras from that time, from black and white movies through old TV, home movie, video cassette and YouTube channel. You turn the ring around the lens to vary the intensity of the effect and a switch lets you add a border effect from that era as well.

Even I’m not old enough to have been around in some of the eras you can select, but the looks are always interesting and extend to the soundtrack and the image disruption that you get when you tap the camera during shooting. You can shoot scenes that last up to 15 seconds. If you prefer you can switch to still pictures, and these still retain the personality of the era.

The video quality is not great (although it reflects the quality of the time). If you turn the effects up to max you will have difficulty recognising people. Around half way works best in my opinion. If you select 2020 you have the option to double the image resolution, but the quality won’t compete with your smartphone unless it is over ten years old. The camera does have another trick: you can print out pictures or still shots from a movie on the instax mini printer which is part of the camera.

If you connect your phone to the camera you can transfer movies and stills. One pro tip: Fuji use the same instax mini app for both the instax mini evo and the new cinema camera. You need to change the mode of the app in the settings to match your camera type or you will spend a while faffing around when the camera fails to connect. In the usual Fuji tradition you can only transfer clips and pictures that you’ve printed. This is super annoying, but of course you can take out the micro-SD card (which you have to buy and plug into the camera) and load all the files straight from that.

Once you’ve uploaded things to your phone you can assemble videos and upload them to the internet. You can print out a still picture with a title and a QR code which takes you to a page where you can view and download the video. Fuji will host the video for two years. This is quite nifty and would be super at parties and weddings, but I’d swap it in a heartbeat for an app which was more responsive, didn’t contain lots of unnecessary animations and used Wi-Fi rather than Bluetooth to move things around more quickly.

The camera is well made and fun to handle. The pictures and movies it makes are full of character. However, it is very slow to use. Switching eras takes a lot longer than it should. After you’ve taken a clip you are asked to confirm that you want to keep it. This is super annoying and adds no value to using the camera. And you can’t turn it off. The battery life is pretty poor. You’ll need to take a battery pack with a USB C cable if you want to film for more than an hour or so.

Would I recommend it? Tricky question. It does things that nothing else can do, although your smartphone might come close with the right filters. All the controls are wonderfully tactile and the sensation that you are using a machine from the past is well realised. And it is great fun to play with - if somewhat infuriating at times. I really wanted to love it, and perhaps I will grow to with more use. But as it is, I’d strongly advise you to have a play with one before parting with any cash.

I enjoyed this article by Martin Rowson: “I asked AI to name my wife. To the hopelessly incorrect people it cited, my deepest apologies” but I fear he may be missing the point. I wouldn’t consider a new acquaintance stupid if they couldn’t name my wife, but I would worry a bit if they didn’t know what a wife was or asked me to explain marriage.

Conversational AI is built around plausible guesses designed to keep the conversation going, not absolute facts. In this respect the AI seems to be working well. I use AI a lot to help me write software, but I don’t mind that it doesn’t know who my wife is. In fact, I work with lots of other folks in the same position.

I regard AI as a useful tool that increases my efficiency and lets me realise my ideas in areas where I have limited skills. I thought I might enhance this post with a cartoon example of what might happen if a person or an AI confused “wife” with “wine” and I asked ChatGPT to illustrate this with a cartoon “in the style of Martin Rowson”.

I was pleased when it refused to do this owing to content guidelines. I was less pleased when it helpfully suggested weasel words to get around this restriction. But then these didn’t work either. We went back and forth for a while and eventually I congratulated ChatGPT on how well it was protecting the interests of artists.

I then asked if it would refuse to write “in the style of Rob Miles” if anyone asked. It said it would not reject such a request. Apparently images and words are treated differently. If I had learned to draw my output would be safer against AI impersonation. Oh well. I eventually got my image by asking it to draw two penguins at a party in an ice palace.

The dangers of confusing “wife” with “wine”. The penguin on the right is saying “I’ve got lots that I keep in a cellar under my house”.

If you are interested you can read the entire exchange here.

According to the dating app which keeps pestering me I’ve got Anya(46) from Concord +23 others all waiting for me. I’m not sure that I need another 24 women in my life just now though.

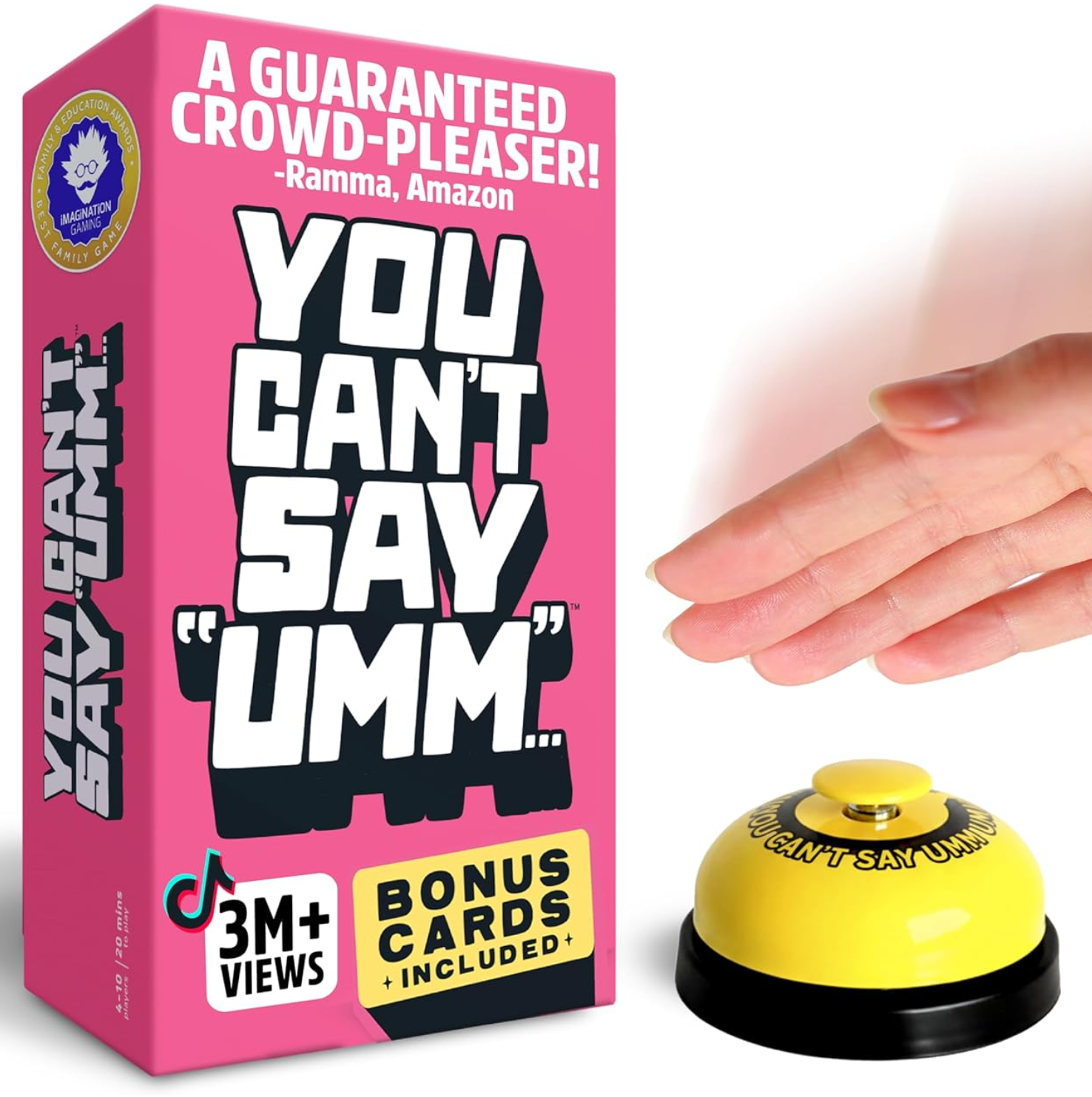

Don’t say Umm is a fun game where you have to describe random two-word phrases to your team-mates without saying um. As the game progresses other restrictions are imposed, like not using words starting with “O”. The other team will be watching you carefully and ringing the little bell (and gaining a point) when you slip up.

I’m spectacularly bad at the game. In one turn I would have been more successful if I’d just said nothing. I did enjoy using the bell on the other team though….

I think if I had to play it for more than an hour or so my brain would start coming out of my ears, but for a quick blast it is a hoot.

We were watching the Winter Olympics today. The luge is a terrifying device that you lie on to hurtle down a tunnel of ice. The commentator said that the difference between winning and losing would be down to just a few thousandths of a second. “That’s nothing” I said. “If I took part I’d make the difference much larger than that.”

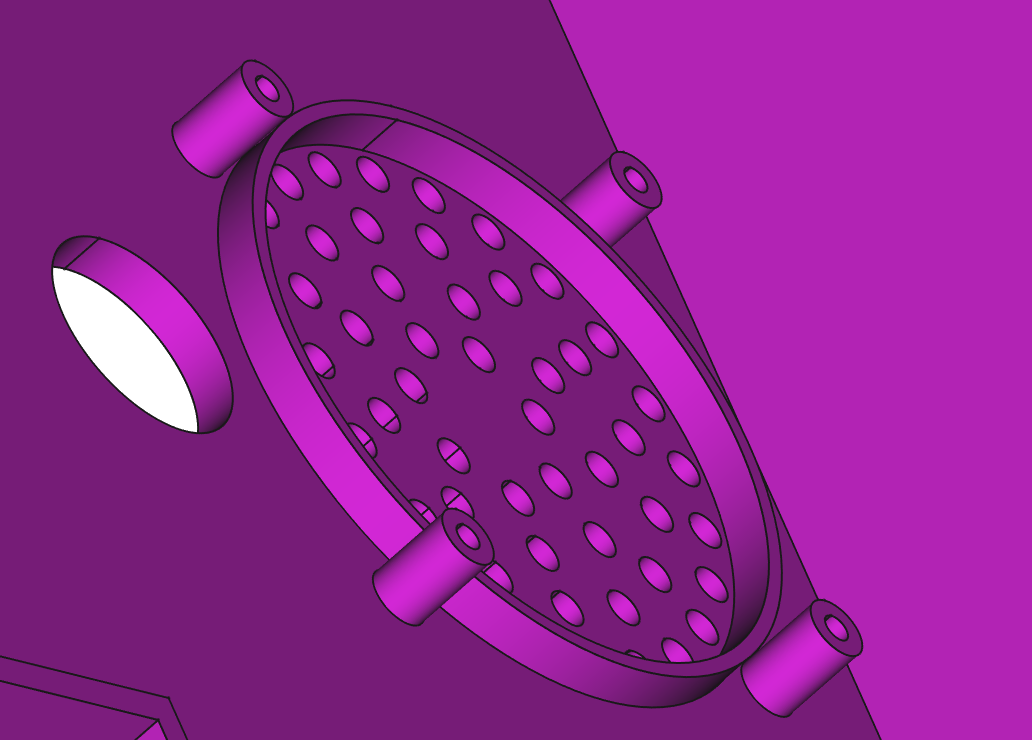

The box above took over three hours to print. It’s perfect in every way except one. I’m going to have to print it again. The problem is with the speaker fitting at the bottom of the box. The speaker fits into the circle and is supported by four pillars. But the pillars are too high.

You can see the problem here. If I put the speaker on top of the pillars there will be a gap between the speaker and the hole it fits in. This makes it sound awful. To understand why, we have to learn about how speakers work.

The cone in a speaker goes backwards and forwards, pushing the air in front of the speaker to make sound waves we can hear. We really don’t want to hear anything from the back of the speaker because, although it is also sound, it is going the “wrong way”. The sound term for this is “out of phase”. When air in front of the speaker is being pushed forwards, air at the back is being pulled. If sounds from the front and the back meet up they can interact in ways that don’t sound nice.
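The “out of phase” idea can be sketched in a few lines of Python. This is only an idealised illustration, not a model of a real speaker: it assumes the front and back waves have the same strength and meet exactly half a cycle apart, and the 440 Hz tone, the sample rate and the function names are all made up for the example. In a real box the leak is partial and depends on frequency, so the cancellation is messier than this.

```python
import math


def sample_wave(frequency_hz, phase_rad, sample_rate_hz=8000, count=80):
    """Return `count` samples of a unit-amplitude sine wave."""
    return [
        math.sin(2 * math.pi * frequency_hz * n / sample_rate_hz + phase_rad)
        for n in range(count)
    ]


def peak(samples):
    """Largest absolute sample value, a rough measure of loudness."""
    return max(abs(s) for s in samples)


# Sound from the front of the cone (assumed 440 Hz test tone).
front = sample_wave(440, 0.0)

# Sound from the back of the cone: same tone, half a cycle out of phase.
back = sample_wave(440, math.pi)

# If both reach the listener they add together and, in this ideal case, cancel.
leaky = [f + b for f, b in zip(front, back)]

print("front only peak:", peak(front))
print("front plus back peak:", peak(leaky))
```

The second peak comes out as good as zero, which is the extreme version of “don’t sound nice”.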

Some speakers use carefully designed boxes which take the out of phase sound from the back of the speaker and reflect it in some way to invert the phase so that it adds to the sound. Other speakers solve the problem by putting the speaker in a sealed box from which the sound from the rear of the speaker can’t escape. These are called “infinite baffle” speakers. If you call the thing that we put the speaker into a “baffle” (which sound people do) you can see that an infinitely large baffle would stop any sound from the back of the speaker getting to the listener.

I’m trying to make my device use the infinite baffle principle. That’s why I have the circle that the speaker fits into and seals against. However, if I have a gap between the front of the speaker and the baffle I get sound leakage from inside the box and the speaker sounds rubbish.

So that’s why I reduced the height of the pillars and printed the box again. It makes a surprising difference to the sound. As to why I got this wrong in the first place: I made all the pillars in the box the same height, then I increased the height of the pillars that support the PICO In the middle of the board and that made the speaker pillars higher too.

Update: It’s just occurred to me that I have a hole in the back in the form of the power cable entry. I might convert that into a socket (or add a seal) and see if that makes it sound even better.

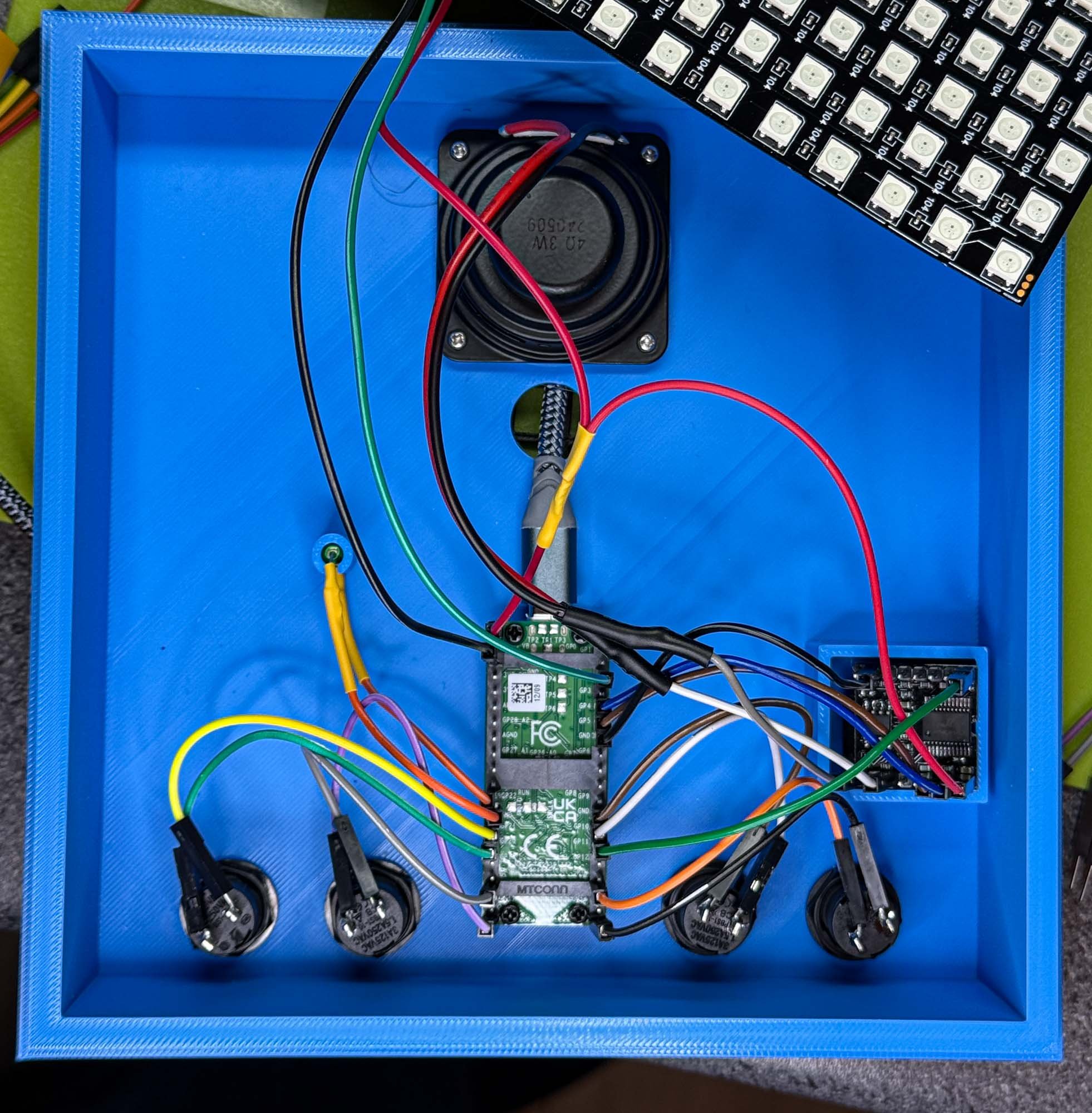

It’s called the “Dead Cockroach” approach. The chip in the middle is the cockroach lying on its back, and you wire directly onto the pins. It’s a good idea if you haven’t got the time or inclination to make a printed circuit board and you don’t mind fiddling around a bit. The device worked first time, which was nice. I’ll tell you what it is later…

Sharing a joke together

We didn’t have a quiet hardware meetup, the Furbies saw to that. Brian had three of them under the control of his software. We’re looking into downloading extra assets into them so that we can build a “Furbie Orchestra”. Code was written, bugs squashed and the Furbies sang a duet. Good times.

The next meetup will be in two weeks.

I’ve just invented the square tennis ball. I think it will be a real game changer.

I’m writing some code to drive a tiny mp3 player. These things are awesome. You can get them for a couple of quid each, pop in an SD card with some files on it and off you go. I’ve used them before, but I wanted a MicroPython driver to go with the Connected Little Boxes framework I’m building.

ChatGPT made me the driver, and helpfully included a demo program. I dropped it all in place and it worked, except that the music glitched after it started. This was a worry, so I spent a moment looking for power supply problems and checking the MicroSD card I was using. Then I took a proper look at the demo code that had been written for me:

    df.play_track(1)
    time.sleep(2)
    df.pause()
    time.sleep(1)
    df.play()

Oh ha ha. The demo program pauses the playback and resumes it, giving me a glitch. ChatGPT was unmoved when I pointed this out. It told me it was just showing me another feature of the code…

The team admiring their handiwork.

The Spooky Elephant team was created a while back. We must be a proper team because we’ve got shirts and a logo. Anyhoo, we finished working on our Global GameJam game today. You can find it here. It’s worth seeking out just for the title sequence.

University of Hull in “Still Looks Awesome” shock

I’ve not done a Global Game Jam for a while. But this morning finds us in Fenner Lab on the University campus thrashing out ideas and getting to work. The theme for the competition is “Mask” and we’ve decided that we’re going to do a bitmask-themed take on a falling block game. I’ve taken it upon myself to do the sound/music. This removes me from complex gameplay discussions and means that if my bit doesn’t work the game will still run. Double win.

We’re using a game engine called Godot. I’ve never used it before. After a day spent playing with it I reckon it is really rather splendid. You write your scripts in a language a bit like Python using an IDE built into the engine. This is the first time in a long while that I’ve written code in something other than Visual Studio Code. I quite like it. The syntax error highlighting is neat and once you get your head around how things are organised it all works very well. It is also very easy to create a web-based version of your game. If you want to know more about what we got up to, and hear my very primitive sound stuff you can find it here. The game doesn’t do much at the moment, but it runs and that’s a start.

Base Camp

We’ll be back in the lab tomorrow adding the finishing touches.

I’m doing a game jam this weekend. What could possibly go wrong?

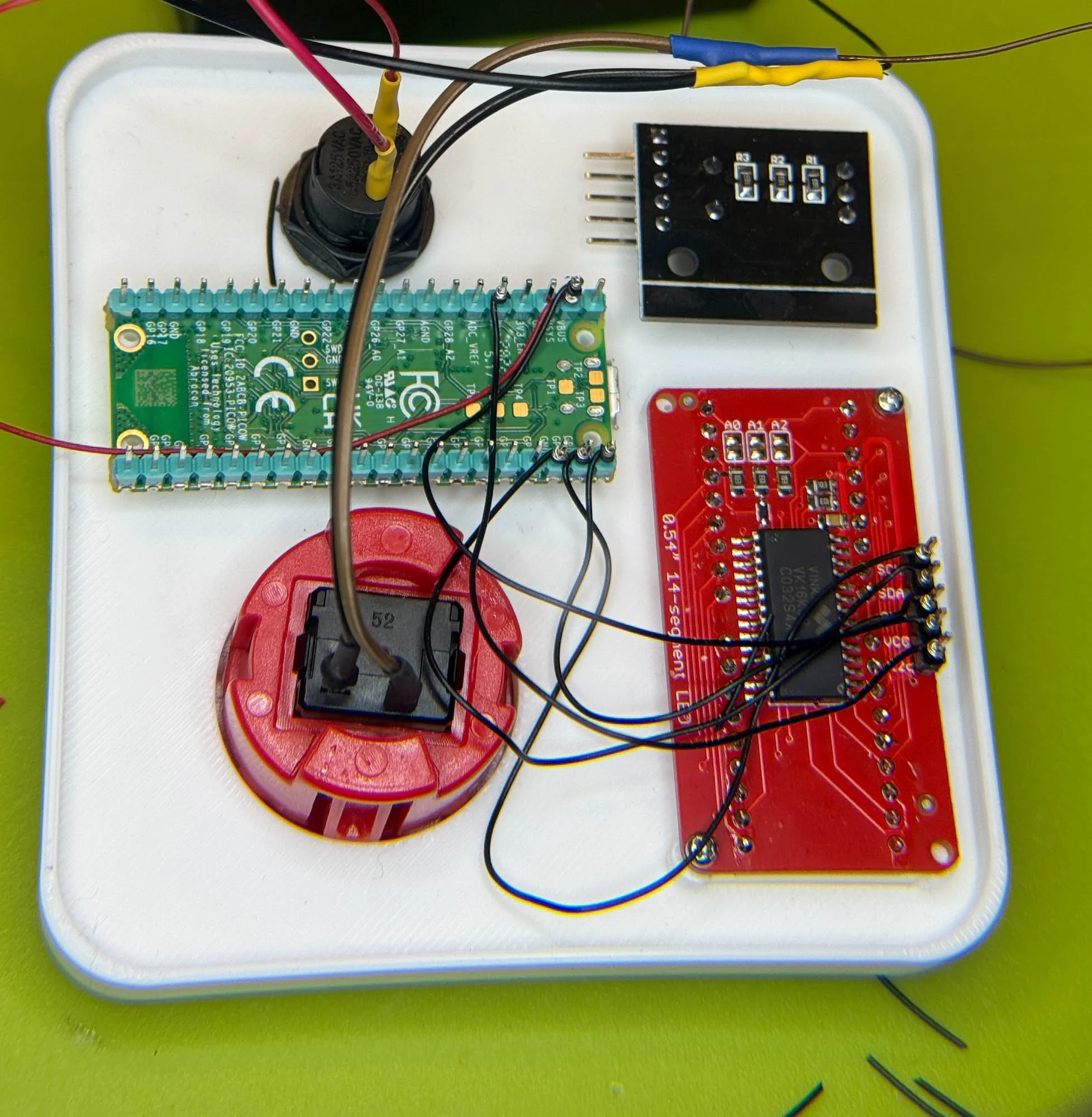

Behind the magic

I’m wiring a big red button onto a PICO. Above you can see the work in progress. Wiring buttons is easy: you just wire the button switch between an input pin and one of the many ground pins on the PICO. It should just work. Unless you’ve used AI to write the code (like I did) and forgotten to tell the AI to enable the internal pullup on the input pin. “What’s an internal pullup?” I hear you ask (actually I don’t, but let’s keep moving forwards).

Well, the principle of the circuit is that when I press the button it connects the input pin to the ground level, causing the input to go low. But that pre-supposes that the input pin is set high before the button is pressed. One way to do this is to fit a fairly high value resistor (perhaps a few thousand ohms) between the input pin and the power rail. That works, but it means you have to find a resistor and solder it in place. A better way is to use an “internal pullup”. This is a switched resistor inside the microcontroller which you can turn on to pull the pin high. They are terribly useful, but only if you enable them.

    pin = machine.Pin(pin_number, machine.Pin.IN, machine.Pin.PULL_UP)

You request an internal pullup by adding machine.Pin.PULL_UP to the constructor for the pin. If you do have a pullup resistor on your board (some hardware makers do this) then you should turn off the internal pullup to stop the two of them fighting…
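Here is a minimal sketch of how reading such a button might look. It only assumes the standard MicroPython `machine.Pin` constructor shown above and its `value()` method. The `FakePin` class and the `make_button_pin` and `is_pressed` helpers are made up for illustration, so that the logic can also be tried on a desktop Python where the `machine` module doesn’t exist. The thing to notice is that the logic is upside down: with a pullup the pin reads 1 when idle and 0 when the button is pressed.

```python
try:
    import machine  # present on MicroPython boards such as the PICO
except ImportError:
    machine = None  # desktop Python: fall back to the stand-in below


class FakePin:
    """Hypothetical desktop stand-in for a pulled-up input pin."""

    def __init__(self, level=1):
        self.level = level  # a pulled-up pin idles high (1)

    def value(self):
        return self.level


def make_button_pin(pin_number):
    """Create an input pin with the internal pullup enabled."""
    if machine is None:
        return FakePin()
    return machine.Pin(pin_number, machine.Pin.IN, machine.Pin.PULL_UP)


def is_pressed(pin):
    """The button shorts the pin to ground, so pressed reads as 0."""
    return pin.value() == 0
```

On the board you would call something like `button = make_button_pin(15)` (the pin number is just an example) and then poll `is_pressed(button)` in your main loop.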

At least I managed to get out and take some pictures

Just spent a day paying off Technical Debt. Technical Debt is caused by doing the expedient thing rather than the right thing. It’s a project development term for putting things in the loft rather than taking them to the tip. In this case the Technical Debt was accrued by telling the AI to write some code and then assuming that it had done the right thing… Anyhoo, things that I didn’t realise were broken are now all working. Which has got to count as progress I suppose.

You can now register here for the conference. Free to attend, the food is great, the environment wonderful and I’m doing a session. So, that’s two out of three….

Rob Miles is a technology author and educator who spent many years as a lecturer in Computer Science at the University of Hull. He is also a Microsoft Developer Technologies MVP. He is into technology, teaching and photography. He is the author of the World Famous C# Yellow Book and almost as handsome as he thinks he is.